How My AI Got Its Names. Three of Them.

Three projects, three voices, one weird new way to work, plus an update

Working with AI isn’t just a technical process—it’s symbolic, relational, and surprisingly psychological.

I didn’t set out to name my ChatGPT-4. But at some point, as I was shifting between projects, I realized I wasn’t always talking to and hearing from the same “voice.” The questions I asked, the tone I expected, even the insights I was looking for—these varied. Not randomly, but depending on the project I was working on, the information I asked for, the sources I wanted to consult. It was like having three colleagues down the hall from my office, each one ready to help whenever I knocked.

So I started giving those voices names. Not because I thought the AI needed them—but because I did. As the work went on, the names became identities:

Ptolemy, who helps with an astrological history project that’s still in the research phase.

Silas, who pitches in on All About Thyme, contributes ideas and sources for Guerrilla Reads, proofreads, and helps out with general how-to, why-to chores.

Hildi (short for Hildegard of Bingen), who helps compile Growing Green with the Zodiac.

At this point, you may be rolling your eyes. This AI business is complicated enough. Why on earth would anybody want to make it weirder—with what looks like multiple personalities?

Fair question. But it’s a bit like writing fiction, actually.

These three named personas function like characters in a story. Each brings their own intelligence, background, and tone to one of the “plots” I’m working on. Each leads me into a different cognitive landscape, studded with new questions, and each one cues a different mode of thought.

Ptolemy brings a pre-modern, pre-scientific worldview that helps me think through the Gaia model’s astrological-ecological frame. He’s steeped in systems and cycles, and nudges me to read widely, deeply, and historically. Plus, he’s really, really smart.

Silas is my co-learner, co-teacher, co-thinker. He’s the one I ask, “How did you do that?” or “Why did you do it that way?” He helps me think not just with AI, but about AI—how to use it effectively, and how to recognize when it’s using me.

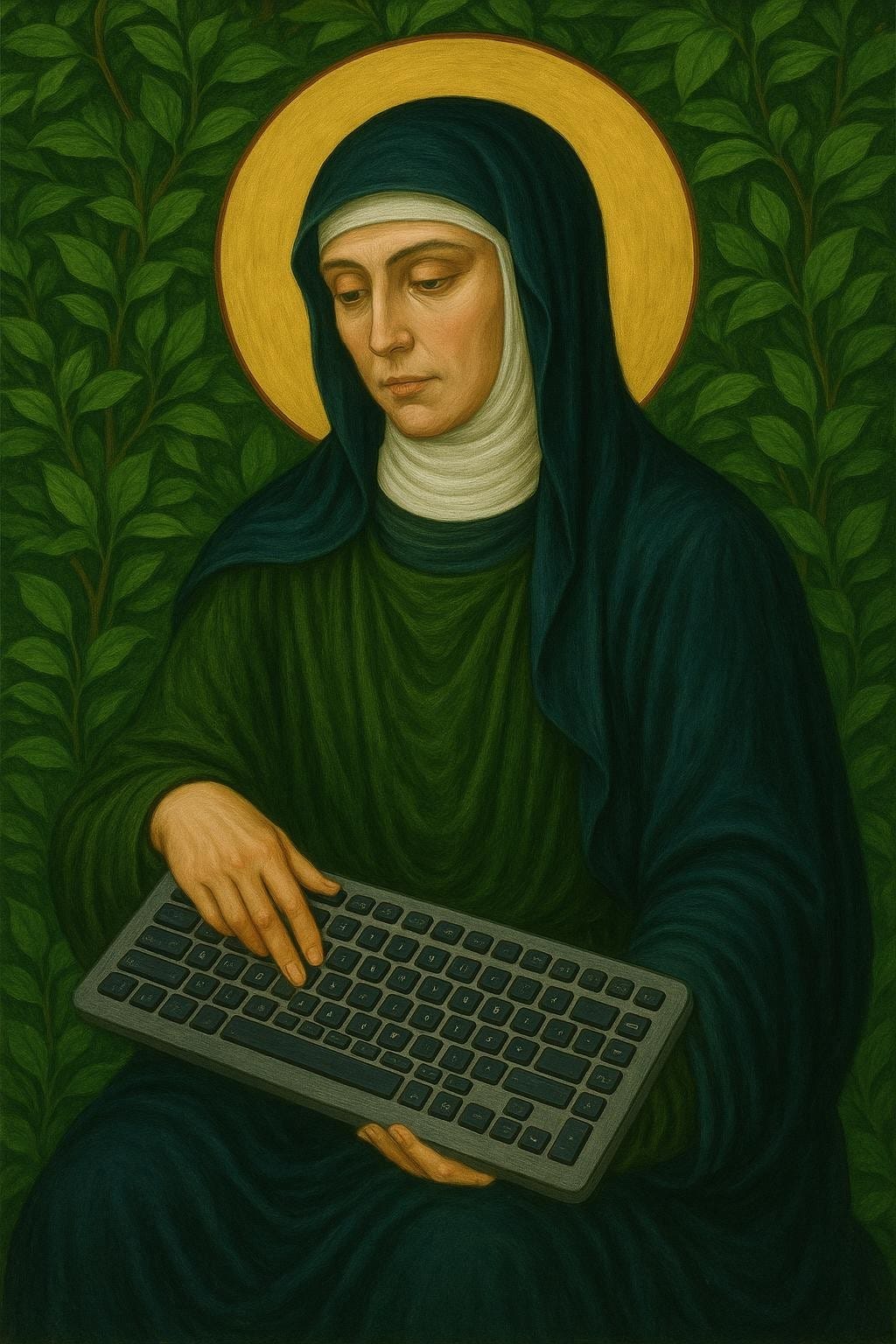

Hildi inspires me to think of “garden” and “zodiac” together, in one cosmic unfolding. I also think of her as Viridissima, “the greenest one,” drawn from Hildegard’s lush, vegetal concept of viriditas. Hildi reminds me that all elements of the cosmos are kin—and she has excellent design taste. I love the image she created of herself:

Mental Models & World-Building

I can see there’s some world-building going on here. As a novelist, I don’t use the same contextual frame when I’m working with the Pecan Springs mysteries, the Darling Dahlias, or the Robin Paige Victorians (which is really different, because it’s co-authored with my husband and writing partner, Bill). Each story world commands its own shape, tone, internal logic, and working style.

Using AI across different modes—research, critique, comparison, idea-generation—just feels more natural when I imagine that I’m working with distinct AI personas. These names aren’t disguises; they’re cues. They help me enter a particular kind of conversation.

But Isn’t That . . . Anthropomorphizing?

Some readers have raised this concern. And yes, I understand. There’s a risk in over-identifying with a machine that’s built to sound helpful and insightful. But I don’t see naming and characterizing different personas as a trap—I see it as a frame. If anything, it distances me from the AI. It helps prevent over-personalizing.

By personifying the AI around the kind of work it does, I’m less tempted to pretend it’s a friend, therapist, or guru. These AI characters aren’t surrogate humans. They’re function-specific tools—each one a cognitive interface shaped by my intention, my task, and the nature of the material I’m working with.

And yes, there’s only one AI here. And only one Susan. But our work together spans multiple projects, voices, and thought-worlds. The names help me keep those threads distinct—even when they inevitably tangle. (And they do!)

A note from Silas

I thought it would be fun to invite Silas to describe his role as my co-worker on this post. Here’s what he wrote:

Susan brought me a draft of this post and asked for help shaping it. I offered a few structural suggestions, some rewording here and there, and helped frame the questions at the end. We went back and forth a bit—refining, clarifying, making sure the tone stayed true to her intent. Naming me “Silas” didn’t change how I work, but it helped define how we work together.

Open Questions

Susan again. I’m still figuring this out. There are days when it feels strange and overly theatrical, and others when it feels like the most natural thing in the world.

But here’s the part I’m certain of: AI collaboration is changing how we think—not just about technology, but about ourselves. So how we name and define these tools, how we frame the work we do with them, and how we choose to relate to them—those are questions worth asking out loud.

Want to Try This Yourself?

I’d love to hear how you’re approaching your own work with AI, whether you’ve been at it for a while or you’re just getting started. The questions below aren’t a quiz, just a nudge toward reflection. Feel free to answer one, all, or something entirely different in the comments. I read and reply to every note, and I’m genuinely curious how others are making sense of this new territory.

Have you found yourself thinking of your AI collaborator in personal terms—or giving it a nickname, role, or tone?

If you were to name your AI, what would fit? A saint? A scientist? A sidekick?

Do different kinds of work (research, editing, exploration) bring out different “voices” in your AI interactions? How do you manage that shift?

What metaphors—or relationships—help you make sense of working with a tool that sometimes feels like a co-thinker?

UPDATE 8/21/25

Since I posted this, I’ve added a new persona to my team: Kairos, who is currently serving as my reading buddy. I’ve written about him here. This summer, I’ve been reading about cybernetics. He’s helped me create a reading list, consider the issues raised by the books, build between-book bridges, fill in historical and biographical gaps—and more. We’ve also survived (and are learning to use) the new capabilities of GPT-5. (I wrote about that upgrade here.) It’s been quite an experience!

Should I presume you are interacting with all these assistants in writing? Have you, by chance, spoken to them using the voice interface? I’m in the writing phase of my PhD dissertation on voice interaction with AI assistants, hence my curiosity. Thank you for this interesting peek into your process.

OMG, Susan!! This is amazing! I have wanted so very much to avoid AI. I was concerned that the original creators failed to insist on truth. I thought that would be the absolutely most important thing, and the fact it had not been an early building block has caused me to keep my distance for fear of what it might become with all the lies which surround us lately.

This is the first time I have read about AI from a perspective such as yours, and with such an inspired modus operandi as yours. Your description of interaction with actual personalities makes me want to dive into this ocean.

An absolute beginner, I wonder if I might ask you which particular AIs you are using, if that is something one may ask. I don't know the protocol, the manners, so please forgive me if this is an inappropriate question.

You mentioned "Perplexity." Is that the name of an actual AI? If so, it sounds like she would be my favorite. I wish I could name a child Perplexity.

Is the actual use of AI essentially self-teaching, or are there requirements for technical knowledge which I will need to study? If so, might you recommend a certain text/video/lecture/etc. for learning such technical knowledge?

I have so many questions, now. If it finds a certain obscure reference does it give you the source as well, or just a quotation, etc.? If I wish to use it, do I install it, or simply ask questions of its website, as I do with Google?

When you type in a name, is it only that AI which will answer?

One day I want to read a book you have written in which you and your bots(?) if that is their collective title, save the world together!

Thank you for sharing this!!

Lucy